Picking up something is not as simple as we usually see. At least for robots, it is not simple. Robotics experts want to invent a robot that can pick up anything, but for now almost all robots will only “blindly scratch†where they’re struggling to catch things. Even if the shape, texture, or position of the object has changed, the robot will not react, so each attempt will fail.

Picking up something is not as simple as we usually see. At least for robots, it is not simple. Robotics experts want to invent a robot that can pick up anything, but for now almost all robots will only “blindly scratch†where they’re struggling to catch things. Even if the shape, texture, or position of the object has changed, the robot will not react, so each attempt will fail.

We have a long way to go to get a perfect grasp of things from the robot once. Why is it so difficult to do this task? Because when people try to catch things, they use multiple senses, mainly including sight and touch. However, at present, many robots only use vision to grasp things.

In fact, people's ability to grasp things is not entirely dependent on vision, although vision is very important to the object (when you want to aim at the right object), but the vision can not tell you everything about the object. Think of how Steven Pinker describes people's tactile senses: “Imagine you pick up a milk box. You hold it too loose and the box will fall. You hold it too tight and squeeze the milk in the box. Come out; you shake gently, and you can even estimate how much milk is in the box by feeling the traction on your finger,†he writes in his book How Mind Works. Because robots do not have those sensory abilities, humans still flung them a few streets in the task of picking up and dropping objects.

As the leader of the CoRo laboratory at Bordeaux Institute of Technology in Montreal, Canada, and the co-founder of robotic company Robotiq in Quebec City, the author has studied the important development of the method of grasping for a long time. At present, the author believes that the attention of all walks of life to robot vision is not the core issue that can achieve perfect grasp. In addition to the vision, there is another thing that promotes the development of robotic grip: haptic intelligence.

Previous research focused on visual rather than tactile intelligence

At present, many researches on robot grabbing have focused on building intelligence around visual feedback. Database image matching is one of the ways to build intelligence, which is also the method used by the Humans to Robots laboratory at Brown University in the Million Objects Challenge. Their idea is to let the robot use the camera to find the target and dominate the movement and grab it. In this process, robots compare the information they obtain in real time with the 3D images stored in the database. Once the robot finds a match, it can find a calculation program that can handle the current situation.

Although Brown University collects visual data for various objects, robotic experts do not necessarily create every item in the visual database for the different situations that each robot may encounter. In addition, there is no environmental limitation in the database matching method, so it does not allow the robot to adjust the grabbing strategy to adapt to different environments.

Other scientists have begun to study robot learning techniques in order to improve the robot's ability to grasp objects. These technologies allow robots to learn from their own experiences, so in the end, robots can find the best way to grab objects themselves. In addition, unlike database matching methods, machine learning does not require the creation of an image database in advance. They only require more practice.

As previously reported by IEEE Spectrum, Google recently conducted a grabbing technology experiment that combines visual systems and machine learning. In the past, scientists tried to use the robots to adopt the methods that humans felt were the best to improve their ability to grasp. Google’s biggest breakthrough was to demonstrate to robots that they can use convolutional neural networks, visual systems, and data from more than 80,000 grabbing moves to teach themselves how to grasp things through the knowledge they have learned from past experiences.

Their prospect does not seem particularly bright: because the robot's response is not pre-programmed, as one of the scientists said, all their progress can be said to be "learned from learning." But vision can tell robots that there are only a few things to grasp, and Google may have reached the forefront of this technology.

Focus only on some of the problems that the vision brings

Why is it difficult for Google and other scientists to solve problems through a single vision? The author concludes that there are probably the following three reasons. First, vision is limited by technology. Even the most advanced vision systems will fail in identifying objects under certain lighting conditions (such as transparent, reflective, low-contrast colors). When objects are too thin, recognition can be blocked.

Second, in many scratch scenes, the entire object cannot be seen, so it is difficult to provide all the information the robot needs. If a robot tries to pick up a wooden clock from the table, a simple vision system can only detect the upper part of the bell. If objects are taken from the box, more objects are involved, and the surrounding objects may obscure some or all of the objects.

The last and most important thing is that the vision does not conform to the essence of the matter: the objects need contact and power, and these are not subject to visual control. In the best case, the vision can let the robot know the shape of the fingers that can make the grab action successful, but in the end the robot needs the information of the touchability to let them know the physical value of the grab.

Tactile Intelligence presents best assists

Tactile plays a central role in human grasping and controlling actions. For amputees who have lost their hands, their greatest confusion is that they do not feel what they are touching when they use prosthetics. Without a sense of touch, amputees need to be close to the target while they are grabbing and controlling things, and a healthy person doesn't even need to look at it when he or she has something to lose.

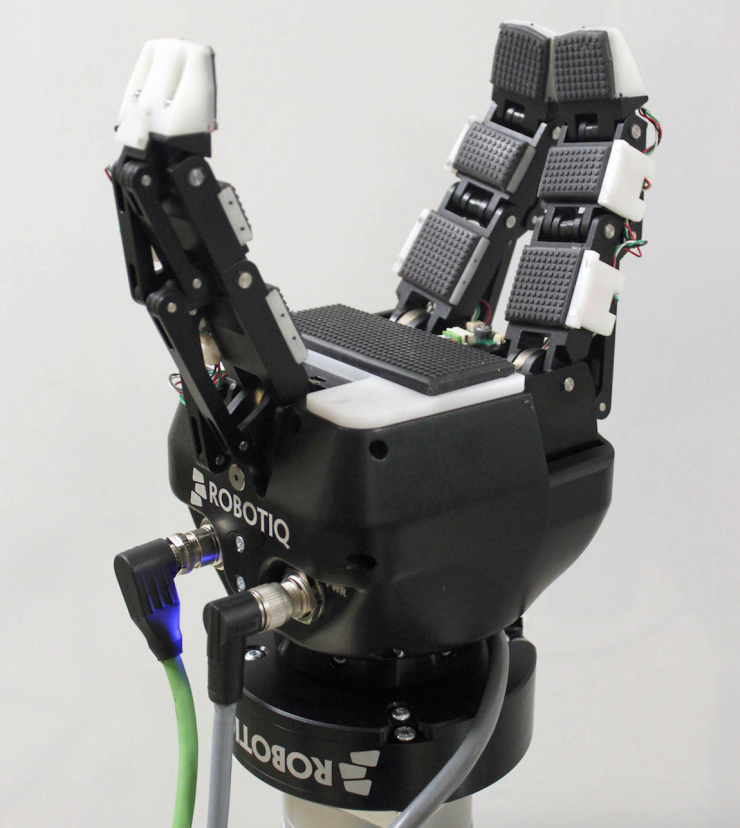

At present, scientists are aware of the important role of tactile sensors in the process of grasping objects. For the past three decades, they have been trying to replace human organs with touch sensors. However, the information sent by the haptic sensors is very complex and high-dimensional, and adding sensors to robots does not directly improve their ability to grasp. What we need is a way to turn unprocessed low-level data into advanced information, thereby improving the ability to grasp and control things. Tactile intelligence can be used to touch, identify the object's sliding and position the object so that the robot can predict whether the object can succeed.

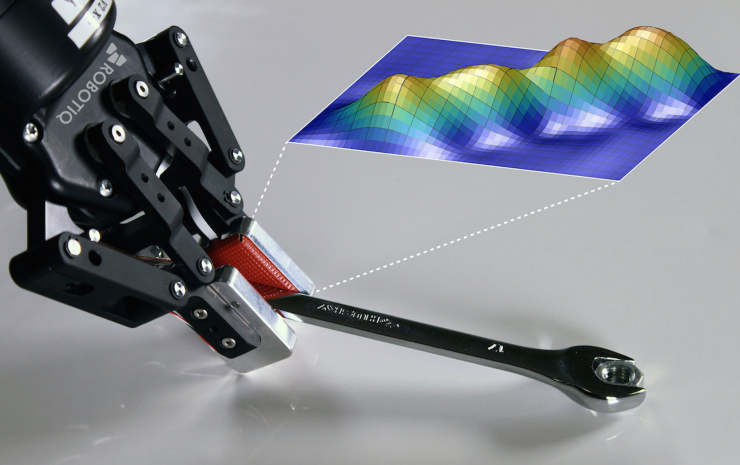

In the CoRo laboratory of the Polytechnic Institute of Bordeaux, the author and his colleagues are developing the core part of tactile intelligence. The latest result is a robotic learning algorithm that uses compression images to predict the success of the grab. This system was developed by Deen Cockburn and Jean-Philippe Roberge to make the robot more human. Of course, humans have learned to judge whether a grip is successful by tactile and observing the shape of the finger. Then we change the shape of the fingers until we have enough confidence in the success of the grab. Before robots learn how to quickly adapt finger patterns, they need to better predict the results of the grasp.

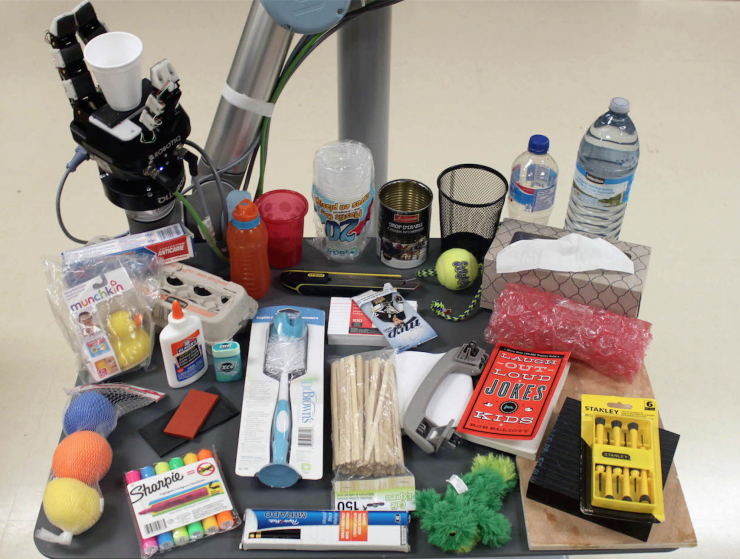

This is why the author believes that CoRo Lab will reach its peak. Combining Robotiq robots with Universal Robots' UR10 controllers, and adding other multi-mode homes and tactile sensors based on the Kinect vision system (only aiming at the geometric center of each object), the resulting robots can be picked up A lot of things, and use the middle of the data to achieve self-learning. Finally, the author and colleagues successfully created a system that can accurately predict 83% of the grab movements.

This is why the author believes that CoRo Lab will reach its peak. Combining Robotiq robots with Universal Robots' UR10 controllers, and adding other multi-mode homes and tactile sensors based on the Kinect vision system (only aiming at the geometric center of each object), the resulting robots can be picked up A lot of things, and use the middle of the data to achieve self-learning. Finally, the author and colleagues successfully created a system that can accurately predict 83% of the grab movements.

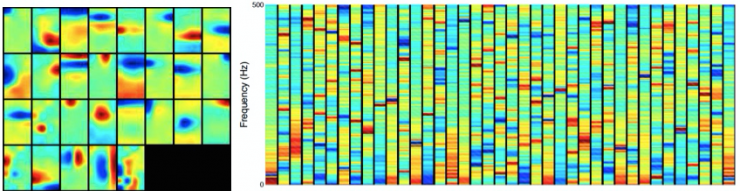

Another team at CoRo Lab led by Jean-Philippe Roberge, focused on sliding monitoring. During the grab, humans can quickly detect the sliding of the object because there is a highly adaptable mechanical stimulator on the finger, which is a rapidly changing sensor on the skin that can sense pressure and vibration. Due to the vibration of the hand surface caused by the sliding of the object, the scientists put the image (spectra) of the vibration instead of the pressure image into the machine learning algorithm. Using the same robots as in the grab prediction experiments, their system can learn the features in the vibration image associated with the object's sliding, which identifies the object's sliding accuracy as high as 92%.

Another team at CoRo Lab led by Jean-Philippe Roberge, focused on sliding monitoring. During the grab, humans can quickly detect the sliding of the object because there is a highly adaptable mechanical stimulator on the finger, which is a rapidly changing sensor on the skin that can sense pressure and vibration. Due to the vibration of the hand surface caused by the sliding of the object, the scientists put the image (spectra) of the vibration instead of the pressure image into the machine learning algorithm. Using the same robots as in the grab prediction experiments, their system can learn the features in the vibration image associated with the object's sliding, which identifies the object's sliding accuracy as high as 92%.

It may seem simple to let the robot notice that the object slides because the sliding is just a series of vibrations. However, how can the robot tell the vibrations caused by the object slipping out of the palm of the robot and the vibration caused by the robot dragging the object on the surface of the object (such as a table)? Do not forget that the movement of the robot arm also causes some slight vibrations. Three different actions will emit the same signal, but the robot needs to respond differently. Therefore, robots need to learn to distinguish between different actions.

In terms of machine learning, the two CoRo teams reached a little consensus: they could not impose manual features on robot learning algorithms. In other words, the system cannot rely on scientists' guesses, but should let the robot decide for itself what is important when it comes to screening (or predicting the results of the grab and predicting the grab).

Previously, "advanced features" were created artificially, which meant that scientists would select features that they thought could help the robot identify different types of objects that slide (or determine if the grasping action is perfect). For example, they might associate a robot with the pressure on the top of the object just like a losing grip. But letting robots learn by themselves is actually more effective because what scientists think is not necessarily correct.

Sparse coding is very useful here. It is an unsupervised functional learning algorithm that operates by creating sparse dictionaries that represent new data. First, the dictionary is automatically generated by the spectrogram (or unprocessed pressure image) and then input into the sparse coding algorithm, which contains many advanced features. Then, when new data is generated in the next grab action, the dictionary is used as an intermediary for converting new data into representative data, also called sparse vectors. Finally, sparse vectors are grouped into different groups that trigger different vibrations (either in successful or failed grab results).

CoRo Lab is now testing how sparse coding algorithms automatically update so that each grab motion can help the robot to make better predictions. Then, during each grab motion, the robot uses this information to adjust its movements. Ultimately, this research will be the best example of combining tactile and visual intelligence to help robots learn to grab different objects.

The future of tactile intelligence

The key point of this study is that vision should not be abandoned. Vision should still contribute absolute power to the grab. However, now that artificial vision has reached a certain stage of development, it can better focus on developing new directions for haptic intelligence instead of continuing to emphasize the single power of vision.

CoRo Lab's Roberge compares the potential of visual and tactile intelligence with Pareto's 80-20 rule: Since the robotic community has gained the upper hand in 80% of visual intelligence, it can hardly dominate the remaining 20%. Therefore, vision will not play such a big role in controlling things. On the contrary, robotics experts are still struggling with the 80% of the tactile perception. Relatively speaking, doing this 80% will be relatively simple, and this may make a huge contribution to the robot's ability to grasp.

If we use robots to touch and recognize objects and clean up rooms for humans, we still have a long way to go. But when that day really comes, we will sincerely thank those scientists who have worked hard to develop tactile intelligence.

Via spectrum

Communication Cable,Screen Communication Cable,Pair Cable,Intrinsic Safety Cable

Baosheng Science&Technology Innovation Co.,Ltd , https://www.bscables.com