The X86 architecture is the instruction set executed by a family of microprocessors. The name began as a numeric shorthand for Intel's general-purpose processor series, and it also identifies the common instruction set those processors share.

The "X" does not stand for any particular processor; it is simply a wildcard covering all the "*86" chips, for example the i386 and the 586 (Pentium). Because Intel's early CPUs were numbered 8086, 80286 and so on, and every CPU in the series is instruction-compatible with its predecessors, X86 came to name the instruction set they all use. Today's Pentium, P2, P4 and Celeron series all support the X86 instruction set, so they belong to the X86 family.

Intel developed the X86 instruction set for its first 16-bit CPU, the i8086. In 1981 IBM used the i8088, a variant of the 8086 with an 8-bit external data bus, as the CPU of the original IBM PC. To improve floating-point performance, Intel also produced the X87 series of math coprocessors, which were added to computers alongside the CPU and execute their own X87 instructions; the X86 and X87 instruction sets are together commonly referred to as the X86 instruction set. As CPU technology advanced, Intel went on to develop the i80386 and i80486 and eventually today's Pentium 4 (hereafter P4) series. To ensure that computers could keep running applications developed in the past, and so preserve the large existing base of software, every one of these CPUs kept the X86 instruction set, which is why they all still belong to the X86 series.

Besides Intel, AMD and Cyrix have also produced CPUs that execute the X86 instruction set. Because these CPUs can run the software developed for Intel CPUs, the computer industry calls them Intel-compatible CPUs. Since the Intel X86 series and its compatible CPUs all use the X86 instruction set, together they make up today's huge lineup of X86 and compatible CPUs. Of course, not every desktop (or portable) computer uses an X86 CPU: some servers and Apple Macintosh machines use other processors, such as Digital Equipment Corporation's (DEC) Alpha 21164 and the PowerPC 604e series.

Starting with the 8086, Intel's 286, 386, 486, 586, P1, P2, P3 and P4 all use the same CPU architecture, collectively referred to as X86.

The logic principle of the X86 architecture CPU

First, how a von Neumann machine operates:

The history of the CPU is not covered here; interested readers can look it up online. The X86 CPU is based on the von Neumann architecture, which basically comes down to these points:

1. Instructions and data are both represented in binary and stored together in the same memory.

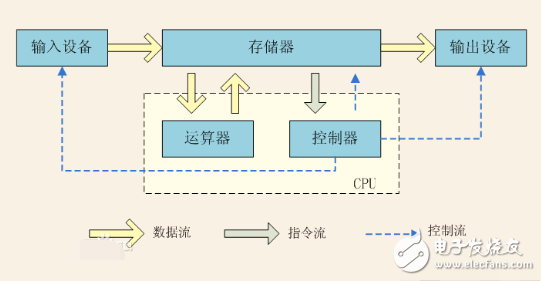

2. The computer consists of an arithmetic unit, a control unit, memory, input devices and output devices. Note: cache is not the same thing as registers; the registers are located inside the control unit and the arithmetic unit.

3. The instructions are executed one after the other.
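The three points can be illustrated with a minimal Python sketch of a toy stored-program machine. Everything in it is a hypothetical simplification, not real X86 encoding: 8-bit words whose high 4 bits are an opcode and low 4 bits an address, four made-up opcodes (`LOAD`, `ADD`, `STORE`, `HALT`), and a single accumulator. What it does show is instructions and data held as plain numbers in one memory and executed one after another.

```python
# Hypothetical 8-bit word format: high 4 bits = opcode, low 4 bits = address.
LOAD, ADD, STORE, HALT = 0x1, 0x2, 0x3, 0xF


def encode(op: int, addr: int = 0) -> int:
    """Pack an opcode and an address into one binary word."""
    return (op << 4) | addr


def run(memory: list[int]) -> list[int]:
    """Execute instructions sequentially from address 0 until HALT."""
    acc = 0  # accumulator
    pc = 0   # program counter
    while True:
        word = memory[pc]  # fetch: an instruction is just a number in memory
        pc += 1            # sequential execution: move on to the next word
        op, addr = word >> 4, word & 0xF
        if op == LOAD:
            acc = memory[addr]
        elif op == ADD:
            acc = (acc + memory[addr]) & 0xFF
        elif op == STORE:
            memory[addr] = acc
        elif op == HALT:
            return memory
        else:
            raise ValueError(f"unknown opcode {op:#x} at address {pc - 1}")


# Code and data share one memory: addresses 0-3 hold code, 4-6 hold data.
memory = [
    encode(LOAD, 4),   # acc = mem[4]
    encode(ADD, 5),    # acc += mem[5]
    encode(STORE, 6),  # mem[6] = acc
    encode(HALT),
    20, 22, 0,
]
print(run(memory)[6])  # 42
```

Note that the data word 20 at address 4 is bit-for-bit identical to the instruction `LOAD 4` at address 0 (both are 0x14): if the program counter ever reached it, it would be executed as an instruction. Only the moment at which a word is fetched decides whether it is treated as code or data.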


Second, the main components:

Registers: at the top of the CPU's memory pyramid, registers have the smallest capacity and the fastest access, typically within a single clock cycle. Their main role is to hold the data that the arithmetic unit operates on, and each register has its own function.

Control unit: made up of the data register, instruction register, program counter, instruction decoder, timing generator and operation controller.

Arithmetic unit: consists of an arithmetic logic unit (ALU), an accumulator, a data buffer register and a status (condition code) register.

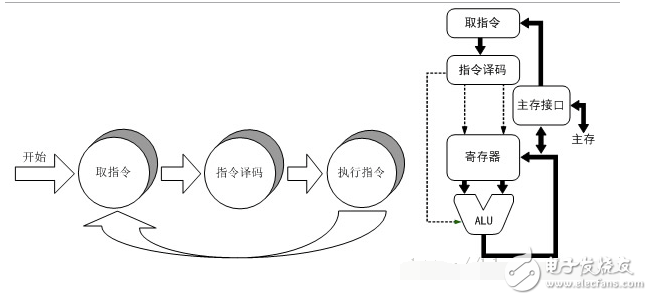
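As a rough illustration of how the arithmetic unit's registers cooperate, the following Python sketch models an assumed 8-bit unit with an accumulator, a data buffer register and two status flags. The flag names echo X86's zero flag (ZF) and carry flag (CF), but the class, its fields and the 8-bit width are hypothetical choices for this example.

```python
from dataclasses import dataclass


@dataclass
class ArithmeticUnit:
    """Toy 8-bit arithmetic unit (hypothetical): accumulator, data buffer, status flags."""
    acc: int = 0         # accumulator: holds one operand and then the result
    buffer: int = 0      # data buffer register: holds the operand arriving from memory
    zero: bool = False   # status flag, like X86's ZF: result was zero
    carry: bool = False  # status flag, like X86's CF: unsigned result exceeded 8 bits

    def add(self, operand: int) -> int:
        self.buffer = operand & 0xFF
        total = self.acc + self.buffer  # the ALU proper performs the addition
        self.carry = total > 0xFF
        self.acc = total & 0xFF
        self.zero = self.acc == 0
        return self.acc


au = ArithmeticUnit(acc=200)
au.add(56)
print(au.acc, au.zero, au.carry)  # 0 True True
```

Adding 56 to 200 overflows 8 bits, so the accumulator wraps to 0 and both flags are set; later instructions, such as conditional jumps, can then test these flags.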

Third, the execution process:

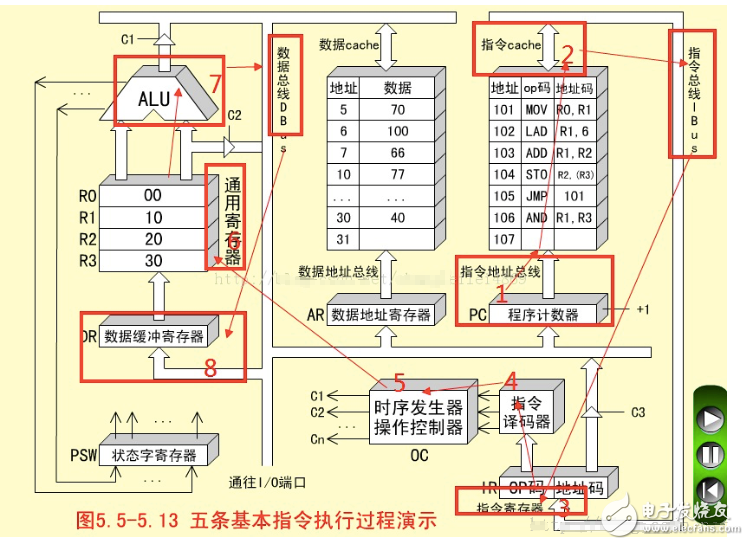

To execute an instruction, the address held in the program counter is first copied into the address register, and the program counter is incremented so that it points to the next instruction.

The instruction read from memory at that address is placed in the instruction register (IR) and then passed to the instruction decoder, whose functions are as follows:

Instruction decoder:

(1) Decoding: determine which operation the instruction is to perform and generate the control signals for it, that is, all the control commands (micro-operation control signals) needed to carry out the instruction's function.

(2) Address formation: based on the addressing mode and the instruction's requirements, form the effective address of the operand. (The 8086 combines a segment address and an offset address into a 20-bit physical address; this scheme was largely phased out from the 32-bit generation onward.) The operand is then fetched from that address (for arithmetic instructions), or a jump address is formed (for transfer instructions) to carry out a program transfer.
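The 8086 segment:offset calculation in (2) is simple arithmetic: the 16-bit segment value is shifted left by 4 bits (multiplied by 16) and the 16-bit offset is added, giving a 20-bit physical address. A small Python sketch, where the function name `physical_address` is just illustrative:

```python
def physical_address(segment: int, offset: int) -> int:
    """8086 real-mode address: segment * 16 + offset, kept to 20 bits."""
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must be 16-bit values")
    return ((segment << 4) + offset) & 0xFFFFF  # the 8086 wraps around past 1 MB


print(hex(physical_address(0x1234, 0x0010)))  # 0x12350
print(hex(physical_address(0xFFFF, 0x0010)))  # 0x0 (wrap-around on the 8086)
```

Many segment:offset pairs map to the same physical address (0x1235:0x0000 also gives 0x12350), and on the 8086 addresses beyond 1 MB wrap around to 0, which is why the result is masked to 20 bits.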

The opcode field of the instruction register feeds the instruction decoder. Once the opcode is decoded, the decoder sends the corresponding signals to the operation controller to carry out the operation.
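The fetch and decode steps described above can be sketched in Python as follows. This is a simplified model under stated assumptions: the opcode table, the signal names and the 4-bit opcode / 4-bit address split are hypothetical, and the program counter advances by 1 per instruction. On a real X86 CPU instructions vary in length, so the instruction pointer advances by the length of the decoded instruction, and decoding is far more involved.

```python
from dataclasses import dataclass

# Hypothetical opcode -> micro-operation control signals (not real X86 encodings).
DECODE_TABLE: dict[int, tuple[str, ...]] = {
    0x1: ("mem_read", "load_acc"),             # LOAD addr
    0x2: ("mem_read", "alu_add", "load_acc"),  # ADD addr
    0x3: ("acc_out", "mem_write"),             # STORE addr
    0xF: ("halt",),                            # HALT
}


@dataclass
class ControlUnit:
    pc: int = 0  # program counter
    ar: int = 0  # address register
    ir: int = 0  # instruction register

    def fetch(self, memory: list[int]) -> None:
        self.ar = self.pc          # current address goes to the address register
        self.pc += 1               # PC now points at the next instruction
        self.ir = memory[self.ar]  # instruction read from memory into the IR

    def decode(self) -> tuple[tuple[str, ...], int]:
        opcode, operand_addr = self.ir >> 4, self.ir & 0xF  # split the IR fields
        if opcode not in DECODE_TABLE:
            raise ValueError(f"undefined opcode {opcode:#x}")
        return DECODE_TABLE[opcode], operand_addr


cu = ControlUnit()
cu.fetch([0x25, 0xF0])
print(cu.ar, cu.pc, cu.decode())  # 0 1 (('mem_read', 'alu_add', 'load_acc'), 5)
```

The opcode field selects the control signals for the operation controller, while the address field is passed on for operand address formation, mirroring functions (1) and (2) of the decoder.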

Timing generator: the timing signal generator produces the timing signals that control each instruction cycle. When the CPU starts to fetch and execute an instruction, the operation controller uses the sequence of timing pulses from the timing generator, with their different pulse intervals, to issue the micro-operation control signals that every part of the machine needs, so that the parts work in an orderly rhythm, each at its specified time. (Timing is also one way to tell instructions from data: a word fetched during the instruction-fetch phase is treated as an instruction, and a word fetched during the execute phase as data. See my article on CPU architecture for details.)
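The cooperation between the timing generator and the operation controller can be pictured as an endless stream of (cycle, beat) pulses, with one micro-operation issued on each beat. In this Python sketch the five beats per instruction cycle and the register-transfer notation for the micro-operations (`AR <- PC` and so on) are hypothetical; real CPUs spend varying numbers of clock cycles on different instructions.

```python
from itertools import islice
from typing import Iterator

# Hypothetical micro-operations, one per timing beat (register-transfer notation).
MICRO_OPS = ["AR <- PC", "PC <- PC + 1", "IR <- M[AR]", "decode IR", "execute"]


def timing_pulses(beats_per_cycle: int) -> Iterator[tuple[int, int]]:
    """Endlessly emit (instruction_cycle, beat) pairs, like a clocked beat generator."""
    cycle = 0
    while True:
        for beat in range(beats_per_cycle):
            yield cycle, beat
        cycle += 1


# The operation controller issues the micro-operation that matches each beat.
for cycle, beat in islice(timing_pulses(len(MICRO_OPS)), 7):
    print(f"cycle {cycle} T{beat}: {MICRO_OPS[beat]}")
```

Here the first three beats form the fetch phase: whatever is read from memory on beat T2 is loaded into the IR as an instruction.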

The decoded instruction then directs the arithmetic unit: the operands are obtained from the registers or through the data buffer, the required operation is performed (for example the logical operations AND, OR and NOT), and the result can be sent out through the data buffer register to an I/O port.

Operation controller: Common control methods include synchronous control, asynchronous control, and joint control.

1. Synchronous control mode: every instruction, and every micro-operation within an instruction, is controlled by fixed timing signals that share a uniform time reference. In other words, all operations are driven by a single clock and complete within standard time slots. (Under synchronous control, the end of each timing signal means the scheduled work is done; the next micro-operation then starts, or control moves on automatically to the next instruction.)

2. Asynchronous control mode: there is no unified clock signal. Operations are coordinated by a request/acknowledge (handshake) protocol, in which the completion response of one operation serves as the start signal of the next.

3. Joint control mode: combines synchronous and asynchronous control. The usual design is to use synchronous control inside each functional component and asynchronous control between functional components.
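To see why the three modes differ, here is a small timing model in Python. The micro-operation latencies and the handshake overhead are made-up numbers, and real circuits are far more complicated; the point is only that a synchronous design gives every step a clock period long enough for its slowest step, while an asynchronous design lets each step take its own time plus a handshake.

```python
def synchronous_time(latencies_ns: list[float]) -> float:
    """Every micro-operation gets one fixed clock period, sized for the slowest one."""
    period = max(latencies_ns)
    return period * len(latencies_ns)


def asynchronous_time(latencies_ns: list[float], handshake_ns: float) -> float:
    """Each operation starts when the previous one answers 'done', plus handshake overhead."""
    return sum(latency + handshake_ns for latency in latencies_ns)


def joint_time(components: list[list[float]], handshake_ns: float) -> float:
    """Synchronous inside each component, one handshake between components."""
    return sum(synchronous_time(steps) + handshake_ns for steps in components)


steps = [2.0, 5.0, 3.0]  # hypothetical micro-operation latencies, in ns
print(synchronous_time(steps))                    # 15.0
print(asynchronous_time(steps, 0.5))              # 11.5
print(joint_time([[2.0, 5.0], [3.0]], 0.5))       # 14.0
```

With these particular numbers the asynchronous sequence is fastest, at the cost of handshake logic, and the joint scheme falls between the two; different latencies or groupings would change the ranking.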

The arithmetic unit's work falls roughly into logical operations (AND, OR, NOT) and numerical operations (addition, subtraction, multiplication and division, all built on addition).
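The idea that arithmetic is built on addition can be sketched in Python for an assumed 8-bit data path: subtraction adds the two's complement of the second operand, multiplication is shift-and-add, and division is repeated subtraction. These are textbook methods chosen for illustration; real X86 ALUs use dedicated, much faster circuits for multiplication and division. Operands are assumed to be in the range 0 to 255.

```python
MASK = 0xFF  # assume an 8-bit data path


# Logical operations: bitwise AND, OR, NOT
def and_(a: int, b: int) -> int:
    return a & b & MASK


def or_(a: int, b: int) -> int:
    return (a | b) & MASK


def not_(a: int) -> int:
    return ~a & MASK


# Arithmetic operations, all built on 8-bit addition
def add(a: int, b: int) -> int:
    return (a + b) & MASK


def sub(a: int, b: int) -> int:
    return add(a, add(not_(b), 1))  # a + two's complement of b


def mul(a: int, b: int) -> int:
    result = 0
    while b:
        if b & 1:                  # add the shifted multiplicand for each 1 bit
            result = add(result, a)
        a = (a << 1) & MASK
        b >>= 1
    return result


def div(a: int, b: int) -> tuple[int, int]:
    if b == 0:
        raise ZeroDivisionError("division by zero")
    quotient = 0
    while a >= b:                  # subtract until the remainder is smaller than b
        a = sub(a, b)
        quotient += 1
    return quotient, a


print(and_(0b1100, 0b1010), or_(0b1100, 0b1010), not_(0b00001111))  # 8 14 240
print(sub(5, 7), mul(12, 11), div(100, 7))  # 254 132 (14, 2)
```

Results wrap modulo 256, so `sub(5, 7)` yields 254, the 8-bit two's complement representation of -2.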

